Information Acquisition Driven by Reinforcement in Non-Deterministic Environments

DOI:

https://doi.org/10.18034/ajtp.v6i3.569

Keywords:

Non-deterministic Markov environment (NME), Reinforcement driven information acquisition (RDIA), Modeling agent-environment interaction, Q-learning

Abstract

What is the fastest way for an agent living in a non-deterministic Markov environment (NME) to learn about the environment's statistical properties? The answer is to create "optimal" experiment sequences by carrying out action sequences that maximize expected knowledge gain. This idea is put into practice by integrating information theory and reinforcement learning techniques. Experiments demonstrate that the resulting method, reinforcement-driven information acquisition (RDIA), is substantially faster than standard random exploration in certain NMEs. We study exploration separately from exploitation and compare the performance of several RDIA variants with that of traditional random exploration.
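The idea of rewarding an agent for expected knowledge gain can be illustrated with a small tabular sketch. It is not the authors' implementation: it assumes the reward is the Kullback-Leibler divergence between the agent's transition estimate after and before each observation, that estimates use Laplace smoothing, and that actions are chosen epsilon-greedily by a Q-learner. The names `kl_divergence`, `estimate`, and `explore`, and all parameter values, are hypothetical.

```python
import math
import random


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def estimate(counts):
    """Laplace-smoothed probability estimate from observation counts."""
    total = sum(counts) + len(counts)
    return [(c + 1) / total for c in counts]


def explore(transitions, steps=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Q-learning in which the reward is the information gained per step.

    transitions[s][a] is the true next-state distribution, unknown to the
    agent and used here only to simulate the environment.
    """
    rng = random.Random(seed)
    n_states = len(transitions)
    n_actions = len(transitions[0])
    # counts[s][a][s2]: how often action a in state s led to state s2.
    counts = [[[0] * n_states for _ in range(n_actions)] for _ in range(n_states)]
    q = [[0.0] * n_actions for _ in range(n_states)]
    state = 0
    for _ in range(steps):
        # Epsilon-greedy action selection (an assumption of this sketch).
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: q[state][a])
        next_state = rng.choices(range(n_states), weights=transitions[state][action])[0]
        # Reward: how much the observation changed the model's estimate.
        before = estimate(counts[state][action])
        counts[state][action][next_state] += 1
        after = estimate(counts[state][action])
        reward = kl_divergence(after, before)
        # Standard one-step Q-learning update.
        q[state][action] += alpha * (reward + gamma * max(q[next_state]) - q[state][action])
        state = next_state
    return counts, q
```

Calling `explore` with a nested list of transition probabilities returns the observation counts and the learned Q-table. Because the information-gain reward shrinks as a state-action pair is visited more often, the Q-values in this sketch tend to steer the agent toward transitions it still models poorly.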

References

Barto, A. G., R. S. Sutton and C. W. Anderson, 1983. Neuron-like elements that can solve difficult learning control problems, IEEE Truns. Syst. Man Cybern. 13 (5): 834-846.

Baum, E. B. 1991. Neural nets that learn in polynomial time from examples and queries. IEEE Transactions on Neural Networks, 2(1):5–19.

Behnen, K. and Neuhaus, G. 1984. Grundkurs Stochastik. B. G. Teubner, Stuttgart.

Bellman, R. E. 1983. Dynamic Programming (Princeton University Press, Princeton, NJ, 1957). S. Ross, Introduction to Stochastic Dynamic Programming (Academic Press, New York, 1983).

Bertsekas, D. P. 1987. Dynamic Progrummin~: Deterministic and Stochastic Models (Prentice-Hall, Englewood Cliffs, NJ.

Bynagari, N. B. (2015). Machine Learning and Artificial Intelligence in Online Fake Transaction Alerting. Engineering International, 3(2), 115-126. https://doi.org/10.18034/ei.v3i2.566

Bynagari, N. B. (2016). Industrial Application of Internet of Things. Asia Pacific Journal of Energy and Environment, 3(2), 75-82. https://doi.org/10.18034/apjee.v3i2.576

Bynagari, N. B. (2017). Prediction of Human Population Responses to Toxic Compounds by a Collaborative Competition. Asian Journal of Humanity, Art and Literature, 4(2), 147-156. https://doi.org/10.18034/ajhal.v4i2.577

Bynagari, N. B. (2018). On the ChEMBL Platform, a Large-scale Evaluation of Machine Learning Algorithms for Drug Target Prediction. Asian Journal of Applied Science and Engineering, 7, 53–64. Retrieved from https://upright.pub/index.php/ajase/article/view/31

Bynagari, N. B., & Fadziso, T. (2018). Theoretical Approaches of Machine Learning to Schizophrenia. Engineering International, 6(2), 155-168. https://doi.org/10.18034/ei.v6i2.568

Cohn, D. A 1994. Neural network exploration using optimal experiment design. In J. Cowan, G. Tesauro, and J. Alspector, editors, Advances in Neural Information Processing Systems (NIPS) 6, pages 679–686. Morgan Kaufmann.

Fedorov. V. V. 1972. Theory of optimal experiments. Academic Press.

Ganapathy, A. (2016). Speech Emotion Recognition Using Deep Learning Techniques. ABC Journal of Advanced Research, 5(2), 113-122. https://doi.org/10.18034/abcjar.v5i2.550

Ganapathy, A. (2017). Friendly URLs in the CMS and Power of Global Ranking with Crawlers with Added Security. Engineering International, 5(2), 87-96. https://doi.org/10.18034/ei.v5i2.541

Ganapathy, A. (2018). Cascading Cache Layer in Content Management System. Asian Business Review, 8(3), 177-182. https://doi.org/10.18034/abr.v8i3.542

Holland, J. H. 1986. Escaping brittleness: the possibilities of general-purpose learning algortihms applied to parallel rule-based systems, in: Muchine Lenrnin~: An Artificial Intelligence Approach II (Morgan Kaufmann, San Mateo, CA, 1986).

Hwang, J., J. Choi, S. Oh, and R. J. Marks. 1991. Query-based learning applied to partially trained multilayer perceptrons. IEEE Transactions on Neural Networks, 2(1):131–136, 1991.

Kaelbling. L. P. 1993. Learning in Embedded Systems. MIT Press.

MacKay, D. J. C. 1992. Information-based objective functions for active data selection. Neural Computation, 4(2):550–604, 1992.

Neogy, T. K., & Bynagari, N. B. (2018). Gradient Descent is a Technique for Learning to Learn. Asian Journal of Humanity, Art and Literature, 5(2), 145-156. https://doi.org/10.18034/ajhal.v5i2.578

Neogy, T. K., & Paruchuri, H. (2014). Machine Learning as a New Search Engine Interface: An Overview. Engineering International, 2(2), 103-112. https://doi.org/10.18034/ei.v2i2.539

Paruchuri, H. (2015). Application of Artificial Neural Network to ANPR: An Overview. ABC Journal of Advanced Research, 4(2), 143-152. https://doi.org/10.18034/abcjar.v4i2.549

Plutowski, M., G. Cottrell, and H. White. 1994. Learning Mackey-Glass from 25 examples, plus or minus 2. In J. Cowan, G. Tesauro, and J. Alspector, editors, Advances in Neural Information Processing Systems (NIPS) 6, pages 1135–1142. Morgan Kaufmann.

Schmidhuber J. and Storck, J. 1993. Reinforcement driven information acquisition in nondeterministic environments. Report.

Schmidhuber. J. 1991a. Curious model-building control systems. In Proceedings of the International Joint Conference on Neural Networks, Singapore, volume 2, pages 1458–1463. IEEE press.

Schmidhuber. J. 1991b. A possibility for implementing curiosity and boredom in model-building neural controllers. In J. A. Meyer and S. W. Wilson, editors, Proc. of the International Conference on Simulation of Adaptive Behavior: From Animals to Animats, pages 222 – 227. MIT Press/Bradford Books, 1991.

Storck. J. 1994. Reinforcement-Lernen und Modell bildung in nicht-deterministischen Umgebungen. Fortgeschrittenenpraktikum, Fakult¨at f¨ur Informatik, Lehrstuhl Prof. Brauer, Technische Universit¨at M¨unchen.

Sutton, R.S. 1988. Learning to predict by the method of temporal differences, Mach. Learn. 3 (1): 9-44.

Thrun S. and M¨oller. K. 1992 Active exploration in dynamic environments. In D. S. Lippman, J. E. Moody, and D. S. Touretzky, editors, Advances in Neural Information Processing Systems (NIPS) 4, pages 531–538. Morgan Kaufmann.

Vadlamudi, S. (2015). Enabling Trustworthiness in Artificial Intelligence - A Detailed Discussion. Engineering International, 3(2), 105-114. https://doi.org/10.18034/ei.v3i2.519

Vadlamudi, S. (2016). What Impact does Internet of Things have on Project Management in Project based Firms?. Asian Business Review, 6(3), 179-186. https://doi.org/10.18034/abr.v6i3.520

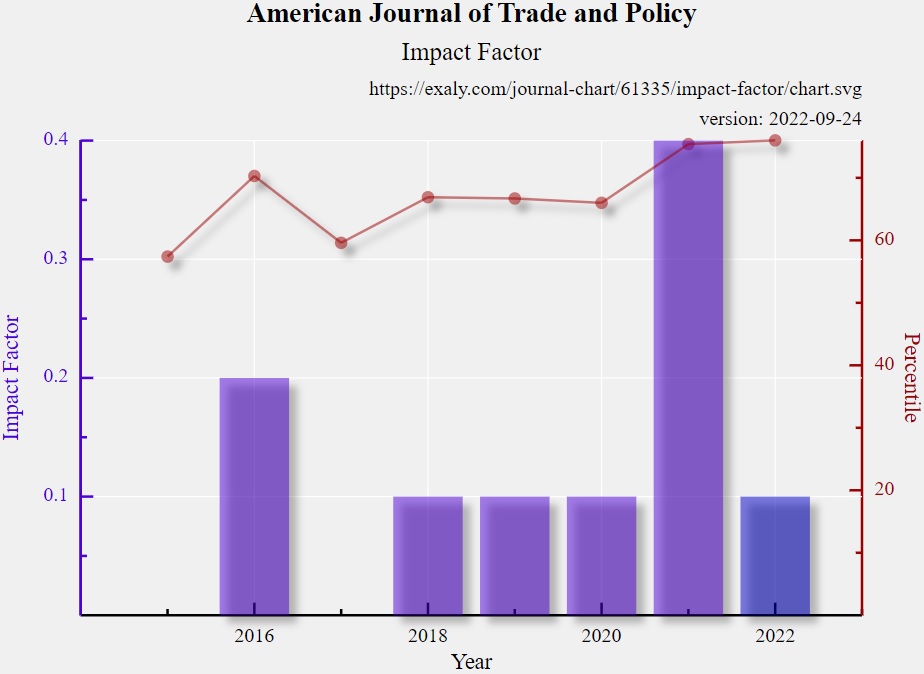

Vadlamudi, S. (2017). Stock Market Prediction using Machine Learning: A Systematic Literature Review. American Journal of Trade and Policy, 4(3), 123-128. https://doi.org/10.18034/ajtp.v4i3.521

Vadlamudi, S. (2018). Agri-Food System and Artificial Intelligence: Reconsidering Imperishability. Asian Journal of Applied Science and Engineering, 7(1), 33-42. Retrieved from https://journals.abc.us.org/index.php/ajase/article/view/1192

Watkins. C. J. C. H. 1989. Learning from Delayed Rewards. PhD thesis, King’s College, Oxford, University of Cambridge, England.

Whitehead S. D. and Ballard, D. H.. 1991.A study of cooperative mechanisms for faster reinforcement learning, Technical Report 365, Computer Science Department, University of Rochester, Rochester. NY.

Williams, R. J. 1986. Reinforcement learning in connectionist networks, Technical Report ICS 8605, Institute for Cognitive Science, University of California at San Diego.

License

American Journal of Trade and Policy is an Open Access journal. Authors who publish with this journal agree to the following terms:

- Authors retain copyright and grant the journal the right of first publication with the work simultaneously licensed under a CC BY-NC 4.0 International License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this journal.

- Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the journal's published version of their work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this journal. We require authors to inform us of any instances of re-publication.